Artificial Intelligence (AI) has become an integral part of our daily lives, transforming the way we work, communicate, and interact with the world. From voice assistants like Siri and Alexa to recommendation algorithms on Netflix and Amazon, AI is everywhere. It’s helping doctors diagnose diseases, enabling businesses to provide personalized customer experiences, and even driving autonomous vehicles.

However, as AI continues to evolve and become more sophisticated, it’s crucial that we use it responsibly. Responsible use of AI means ensuring that AI systems are transparent, fair, and don’t infringe on human rights. It means understanding the limitations of AI, protecting user data, and being aware of the ethical implications of AI use.

In this blog post, we’ll explore the “TRUST IN AI” framework for using AI responsibly, along with practical guidelines and best practices. Whether you’re an AI professional, a business owner, or a casual user, this post will equip you with the knowledge you need to navigate the world of AI ethically and responsibly.

Understanding AI

Artificial Intelligence, or AI, is a field of computer science that aims to create machines that mimic human intelligence. This could be anything from recognizing speech to learning, planning, problem-solving, or even perception. AI can be categorized into two main types:

Narrow AI

- Narrow AI, also known as artificial narrow intelligence (ANI) or weak AI, refers to AI systems designed to perform one specific task or a limited set of tasks.

- It contrasts with strong AI, which would be capable of handling a wide range of tasks. Typical narrow AI tasks include playing chess, facial recognition, analyzing big data, and acting as a virtual assistant.

- Virtual assistants such as Siri, Alexa, and Google Assistant are common examples.

General AI

General AI, also called strong AI, refers to a hypothetical system that could do everything a person can do: think, learn, and apply what it knows across many different areas. For now, it exists only in research and fiction, not in real-world products.

AI works by combining large amounts of data with fast, iterative processing and intelligent algorithms. This lets the software learn by itself from patterns and features in the data. A common method of AI is machine learning, where algorithms are used to allow computers to learn and improve from experience.
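To make "learning from patterns in the data" concrete, here is a minimal sketch in Python. It is a toy illustration, not how any production AI system is built: the data points are made up (they follow the rule y = 2x + 1), and the names `train`, `w`, and `b` are chosen only for this example. The program is never told the rule; it repeatedly adjusts two numbers until its predictions match the data.

```python
# Hypothetical training data that follows the hidden rule y = 2x + 1.
data = [(x, 2 * x + 1) for x in range(10)]

def train(data, steps=5000, lr=0.01):
    """Fit y = w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0  # start with no knowledge of the rule
    n = len(data)
    for _ in range(steps):
        # How much the error changes if w or b is nudged slightly.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        # Move both values a small step in the direction that reduces error.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

w, b = train(data)
print(f"learned rule: y = {w:.2f} * x + {b:.2f}")
```

The same loop of "predict, measure the error, adjust" underlies much of machine learning, only with far more data and far more adjustable numbers.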

Use of AI in everyday life

Artificial Intelligence (AI) is increasingly being used in various aspects of our daily lives. Here are a few ways AI is commonly used:

- Personal Assistants: AI powers personal assistants like Siri, Alexa, and Google Assistant to understand your speech and carry out tasks for you.

- Recommendation Systems: AI helps recommend what you might like to watch (Netflix) or buy (Amazon) based on your past behaviour.

- Autonomous Vehicles: AI is used in self-driving cars to perceive the environment and make decisions.

- Healthcare: In healthcare, AI predicts diseases, helps diagnose illnesses, and tailors treatment plans to individual patients.

- Smart Home Devices: AI enables smart thermostats to automatically adjust your home’s HVAC system, while cameras can alert consumers to the presence of people, cars or packages.

- eCommerce: AI is used in online shopping to suggest products, manage sales and returns, and provide customized shopping experiences.

- Trend Identification in Retail: Online stores use AI to identify trends based on what’s selling in their store and their competitors.

- Content Recommendations: AI-powered recommendation engines personalize the articles, videos, and music suggested to each user.

- Facial Recognition to Unlock Phones: AI uses camera and sensor data to map your facial features and match them against the face you registered.

- Financial Fraud Detection: AI is good at pattern analysis, which makes it well suited for determining which credit card transactions may be illicit.

- Taxi Booking Apps: AI analyzes historical data to allocate drivers more efficiently and suggest the fastest and most efficient routes.

These are just a few examples. AI technologies are penetrating nearly every aspect of our lives, almost imperceptibly. They power our devices while continuously improving by analyzing the data we produce on those devices. AI and Machine Learning (ML) algorithms are increasingly employed across industries to automate repetitive processes. AI has changed the way we entertain ourselves, interact with our mobile devices, and even drive our vehicles. We encounter ML algorithms and Natural Language Processing (NLP) in everyday tasks more often than we realize.

Understanding AI and how it works is the first step towards using it responsibly. As we continue to integrate AI into our lives, it’s important to understand its potential and its pitfalls.

The Importance of Using AI Responsibly

Artificial Intelligence (AI) is increasingly prevalent in our daily lives, and with that widespread adoption comes a responsibility to ensure that it is used ethically and responsibly.

AI has the potential to enhance our lives greatly, but it also poses significant ethical and societal challenges. The competitive nature of AI development can lead organizations to prioritize speed over ethical guidelines, bias detection, and safety measures.

This can result in the spread of misinformation, copyright and intellectual property concerns, cybersecurity issues, and data privacy violations. To mitigate these risks, organizations need to ensure that ethical principles are embedded in the design and deployment of AI systems.

Potential Risks and Ethical Considerations

AI systems can influence human decision-making processes, leading to ethical challenges related to automation, decision-making, and transparency. Biases in AI typically arise from the training data used. If the training data contains historical prejudices or lacks representation from diverse groups, then the AI system’s output is likely to reflect and perpetuate those biases. This can lead to unfair treatment and discrimination in crucial areas such as hiring, lending, criminal justice, and resource allocation.

Real-World Examples of AI Misuse

There have been several high-profile cases of AI misuse. For instance, Air Canada was ordered to pay damages to a passenger after its virtual assistant gave him incorrect information. In another case, a chatbot set up to help small firms in New York started advising business owners to break the law.

✅ Read the full article on AP News regarding the incident of incorrect information provided by a virtual assistant.

AI systems have also been accused of racial bias. For example, an algorithm trained primarily on images of white people can perform worse for people of other races, leading to discriminatory outcomes.

In conclusion, while AI has the potential to bring about significant benefits, it is crucial that it is used responsibly. This involves ensuring that AI systems are transparent and fair and do not perpetuate harmful biases. It also involves holding organizations accountable for the misuse of AI and ensuring that adequate regulations are in place to prevent such misuse.

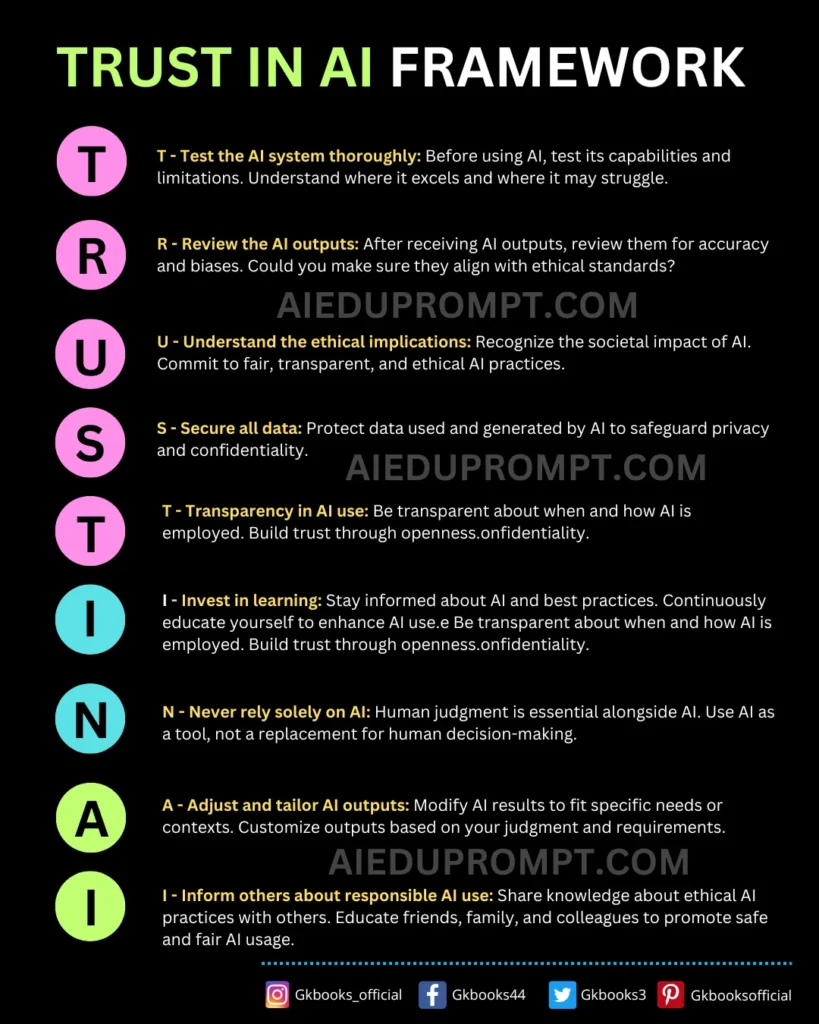

Guidelines for Responsible AI Use: TRUST IN AI Framework

The “TRUST IN AI” Framework is a set of guidelines for the responsible use of Artificial Intelligence (AI). Each letter of the acronym stands for one practice: Test, Review, Understand, Secure, Transparency, Invest, Never, Adjust, and Inform.

T – TEST

T – Test the AI system thoroughly to understand its capabilities and limitations.

This means that before you start using an AI system, it’s essential to spend some time testing it out. This could involve running a variety of tasks, asking different types of questions, or using it in various scenarios. The goal is to get a clear understanding of what the AI system can do well, and where it might struggle or fail.

For example, if you’re using an AI tool for language translation, you might test it with different languages, dialects, and types of text (formal letters, casual conversations, technical documents, and so on) to see how accurately it translates in each case.

By doing this, you can learn the AI system’s strengths and weaknesses. This knowledge can help you use the system more effectively and avoid potential problems. For instance, if you know that the translation tool struggles with a particular dialect, you can be cautious while using it for that dialect or seek human assistance.

Remember, no AI system is perfect. They all have their limitations. Testing helps you understand these limitations and set realistic expectations for what the AI can achieve. It also helps you to use the AI system responsibly, as you’re less likely to misuse it or misinterpret its outputs.
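One way to make this kind of testing repeatable is to keep a small set of test cases, grouped by category, and check how often the tool gets each category right. The sketch below is a minimal illustration under stated assumptions: `fake_translate` is a hypothetical stand-in for whatever AI tool you are testing, the test cases are invented, and comparing outputs by exact text match is a deliberately crude check that a real evaluation would replace with human review or a better metric.

```python
from collections import defaultdict

def evaluate(ai_fn, test_cases):
    """Return the share of passed test cases per category."""
    passed = defaultdict(int)
    total = defaultdict(int)
    for case in test_cases:
        total[case["category"]] += 1
        output = ai_fn(case["input"])
        # Crude check: case-insensitive exact match with the expected text.
        if output.strip().lower() == case["expected"].strip().lower():
            passed[case["category"]] += 1
    return {cat: passed[cat] / total[cat] for cat in total}

def fake_translate(text):
    """Hypothetical stand-in for a real translation tool."""
    lookup = {"bonjour": "hello", "merci": "thank you"}
    return lookup.get(text.lower(), "?")

# Invented test cases covering two kinds of text.
test_cases = [
    {"category": "formal", "input": "Bonjour", "expected": "hello"},
    {"category": "formal", "input": "Merci", "expected": "thank you"},
    {"category": "slang", "input": "Wesh", "expected": "hey"},
    {"category": "slang", "input": "Merci", "expected": "thank you"},
]

report = evaluate(fake_translate, test_cases)
for category, rate in report.items():
    print(f"{category}: {rate:.0%} passed")
```

A lower pass rate in one category is a signal to be cautious, or to bring in a human, for that kind of input.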

R – REVIEW

R – Review the AI outputs for any inaccuracies or biases.

This means that after you receive an output from an AI system, you should carefully review it to ensure its accuracy and check for any potential biases.

AI systems learn by analyzing the data they are trained on. If the training data contains inaccuracies or biases, the AI system can also produce biased or inaccurate results.

For example, if an AI system is trained on data that underrepresents a certain demographic group, it may not perform as well when making predictions or decisions about that group.

Reviewing the AI outputs involves checking the results against known facts or reliable sources and being aware of any potential biases in the data the AI was trained on. It’s also important to consider whether the way the system was designed or programmed might introduce bias into its output.

For instance, if you’re using an AI tool to screen job applications, you should review the results to ensure that qualified candidates are not being unfairly excluded based on factors like gender, race, or age.

By regularly reviewing the AI outputs, you can identify and correct errors or biases, which helps ensure that you’re using AI responsibly and ethically.
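For decisions such as screening job applications, a simple first check is to compare how often each group is selected. The sketch below is one possible approach, not a complete fairness audit: the records are hypothetical, the group names are placeholders, and the 0.8 cutoff is borrowed from the "four-fifths" rule of thumb used in US employment guidelines, which is an assumption added here rather than part of the framework.

```python
from collections import Counter

def selection_rates(records):
    """records: iterable of (group, was_selected) pairs."""
    totals, selected = Counter(), Counter()
    for group, was_selected in records:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}

def impact_ratios(rates):
    """Each group's selection rate relative to the highest rate."""
    best = max(rates.values())
    return {g: (r / best if best else 0.0) for g, r in rates.items()}

# Hypothetical screening results from an AI tool.
records = (
    [("group_a", True)] * 40 + [("group_a", False)] * 60
    + [("group_b", True)] * 20 + [("group_b", False)] * 80
)

rates = selection_rates(records)
ratios = impact_ratios(rates)
# Flag groups selected at less than 80% of the best group's rate.
flagged = [group for group, ratio in ratios.items() if ratio < 0.8]

print("selection rates:", rates)
print("needs a closer look:", flagged)
```

A flagged group does not prove the tool is biased, but it tells you where a human should look more closely.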

U – UNDERSTAND

U – Understand the ethical implications of using AI and commit to using it responsibly.

Understanding the ethical implications of using AI involves recognizing the potential impact AI can have on society and individuals. It’s about ensuring that AI is developed and used in a way that is fair, transparent, and respects the rights and freedoms of all people.

Let’s say a company uses an AI system for hiring. The ethical use of AI would require the company to ensure that the AI does not discriminate against applicants based on gender, race, or age.

This means the AI should be trained on diverse data sets and regularly audited for biases. If the AI inadvertently learns to prefer candidates from a certain university or with a certain type of experience, it could unfairly disadvantage qualified candidates who don’t fit that profile. The company must understand these implications and take steps to prevent such biases, promoting responsible use of AI in hiring practices.

S – SECURE

S – Secure all data used and generated by AI to protect privacy and confidentiality.

This means that when you use AI, you need to make sure that any information or data it uses or creates is kept safe. This is especially important if the data is private or confidential, like someone’s personal details or a company’s secret information.

For example, let’s say you’re using an AI health app that tracks your daily steps and heart rate. This app collects personal data about your health. This data must be kept secure so that it doesn’t fall into the wrong hands. If someone else got hold of this data, they could use it in ways that you don’t want, like selling it to a company that uses it for targeted advertising.

Similarly, if you’re a business using AI to process customer orders, you have access to confidential information, such as customers’ names, addresses, and payment details. You must secure this data to protect your customers’ privacy and trust.

In simple terms, this point is all about keeping data safe. When you use AI, you should always ensure that any data it uses or creates is stored securely and used responsibly.
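One practical habit is to strip obvious personal details from text before passing it to an AI tool. The sketch below is a minimal illustration using Python's standard `re` module. The patterns are simplified assumptions that catch common email and card-number formats only; they are not a substitute for proper data protection measures such as encryption and access control.

```python
import re

# Simplified patterns: they cover common formats, not every possible one.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
CARD = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")  # 13 to 16 digits

def redact(text):
    """Replace email addresses and card-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    text = CARD.sub("[CARD]", text)
    return text

# Hypothetical customer message.
message = "Refund order for jane@example.com, card 4111 1111 1111 1111."
print(redact(message))
```

The AI tool still gets enough context to help with the request, while the sensitive details never leave your system.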

T – TRANSPARENCY

T – Transparency should be maintained about how and when AI is used.

This means that when you use AI, you should be open and transparent about it. People should know when an AI is being used and what it’s being used for. This is what we mean by “transparency.”

For example, let’s say you’re using an AI chatbot on your website to answer customer questions. It’s important to let your customers know that they’re interacting with an AI, not a human. This could be as simple as having the chatbot introduce itself as an AI when the chat starts.

Or, if you’re a company using AI to sort through job applications, you should let applicants know that an AI is being used. This way, they understand how their application is being evaluated.

Being transparent about how and when AI is used helps build trust. It allows people to make informed decisions about how they interact with the AI. So, in simple terms, this point is all about being open and honest about using AI.
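The chatbot example can be sketched in a few lines of Python. This is an illustration under assumptions, not a real chatbot API: `DisclosingChatbot`, `answer_fn`, and the wording of `DISCLOSURE` are all hypothetical names invented for this example. The wrapper makes sure the first reply in a conversation states that the user is talking to an AI.

```python
DISCLOSURE = "Hi! I'm an AI assistant, not a human. "

class DisclosingChatbot:
    """Wraps any reply function so the first reply discloses AI use."""

    def __init__(self, answer_fn):
        self.answer_fn = answer_fn  # hypothetical function that writes replies
        self.disclosed = False

    def reply(self, user_message):
        answer = self.answer_fn(user_message)
        if not self.disclosed:
            # Prepend the disclosure once, at the start of the chat.
            self.disclosed = True
            return DISCLOSURE + answer
        return answer

# Stand-in reply function; a real one would call your AI tool.
bot = DisclosingChatbot(lambda msg: f"You asked: {msg}")
first = bot.reply("Where is my order?")
second = bot.reply("Thanks")
print(first)
print(second)
```

Putting the disclosure in a wrapper means it cannot be forgotten when the underlying AI tool or its prompts change.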

I – INVEST

I – Invest time in learning about AI and staying updated on best practices.

This means that you should spend time learning about AI and how it works. It’s also important to keep up-to-date with the latest ways to use AI responsibly and effectively. This is what we mean by “best practices.”

For example, let’s say you’re a business owner who uses AI to help run your online store. You might spend time each week reading articles, attending webinars, or taking online courses about AI. This could help you learn about new AI tools that could improve your store, or about ethical issues that you need to be aware of.

Or, if you’re a teacher using AI to help grade student assignments, you might attend a workshop on using AI in education. This could help you learn about the best ways to use AI in your classroom and how to do so in a way that’s fair and beneficial for your students.

In simple terms, this point is all about learning and staying informed. When using AI, you should always be looking to learn more and improve your use of it.

N – NEVER

N – Never rely solely on AI; human judgment is crucial.

This means that while AI can be a very helpful tool, it’s important not to depend on it for everything. AI can analyze data and make predictions, but it doesn’t have human intuition or understanding. That’s why human judgment is still very important.

For example, let’s say you’re a doctor using an AI tool to help diagnose diseases. The AI might analyze a patient’s symptoms and suggest a diagnosis. But as a doctor, you would also consider other factors that the AI might not know about, like the patient’s overall health, lifestyle, or family history. You would use your judgment to make the final diagnosis.

Or, if you’re a manager using AI to help make hiring decisions, the AI might analyze resumes and suggest the most qualified candidates. But you would also consider things that the AI can’t, like how well a candidate might fit with the team culture, or their passion and drive. You would use your judgment to make the final hiring decision.
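In software, this principle is often implemented as a "human in the loop" rule: the AI's suggestion is accepted automatically only when the stakes are low and the tool is confident, and everything else goes to a person. The sketch below shows one possible version. The `Suggestion` class, the `route` function, and the 0.9 threshold are assumptions made for illustration, and it presumes the AI tool reports a confidence score, which not every tool does.

```python
from dataclasses import dataclass

@dataclass
class Suggestion:
    label: str
    confidence: float  # 0.0 to 1.0, as reported by a hypothetical AI tool

def route(suggestion, high_stakes, threshold=0.9):
    """Decide whether a suggestion can be auto-accepted or needs a human."""
    # High-stakes decisions always go to a person, however confident the AI is.
    if high_stakes or suggestion.confidence < threshold:
        return "human_review"
    return "auto_accept"

print(route(Suggestion("spam email", 0.97), high_stakes=False))
print(route(Suggestion("spam email", 0.60), high_stakes=False))
print(route(Suggestion("diagnosis: flu", 0.99), high_stakes=True))
```

Diagnoses and hiring decisions, like the examples above, belong in the high-stakes category, so a person always makes the final call.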

A – ADJUST

A – Adjust and tailor AI outputs to fit your specific needs and context.

This means that you can change the results given by AI to make them more suitable for your specific situation or needs. AI can provide a lot of information, but not all of it might be useful or relevant to you. That’s where your judgment comes in.

For example, let’s say you’re using an AI tool to help plan your travel itinerary. The AI might suggest a list of popular tourist spots, but you’re more interested in less crowded, off-the-beaten-path places. In this case, you would adjust the AI’s suggestions based on your personal preferences to create an itinerary that suits you.

Or, if you’re a business owner using AI to analyze market trends, the AI might provide a broad overview of the industry. But you’re only interested in how these trends affect your specific business. So, you would tailor the AI’s output to focus on the information that’s most relevant to you.

To get better results, refine your prompt or ask the AI tool follow-up questions.

I – INFORM

I – Inform others about responsible AI use.

This means that you should share what you know about using AI responsibly with others. This could be your friends, family, colleagues, or anyone else who might be using AI. By sharing this knowledge, you can help others use AI in a way that’s safe, fair, and beneficial.

For example, let’s say you’re a teacher who uses an AI tool to help grade student assignments. You might hold a class discussion about how the tool works, why you’re using it, and how students can use similar tools responsibly. You could also share tips for recognizing and avoiding potential issues, like data privacy concerns or AI bias.

Or, if you’re a business owner using AI to analyze customer data, you might inform your customers about how you’re using AI to improve their shopping experience, while also ensuring their data privacy and security. You could do this through a blog post, an email newsletter, or a notice on your website.

In conclusion, the “TRUST IN AI” Framework serves as a guide to ensure that AI is used responsibly and ethically, fostering trust among users and stakeholders. It emphasizes the importance of values, human impact, and trustworthiness in the development and deployment of AI systems.

This framework is instrumental in navigating the complexities and risks associated with AI, ensuring that its benefits are realized while mitigating potential harms. It’s a crucial step towards the responsible and ethical use of AI.

So, remember TRUST IN AI whenever you use AI.

The Future of Responsible AI

The future of responsible AI is likely to be shaped by a combination of technological advancements, ethical considerations, and regulatory frameworks. Here are some predictions and insights into how responsible AI might evolve:

Predictions for the Evolution of Responsible AI

- Technological Innovation: AI technologies will continue to advance, becoming more sophisticated and integrated into various aspects of daily life. This will necessitate the development of responsible AI practices that ensure these technologies are used ethically and sustainably.

According to a study by Grand View Research, the global AI market is projected to hit $1.81 trillion by 2030.

- Ethical AI: There will be a greater emphasis on embedding ethical principles into AI systems. This includes ensuring fairness, transparency, accountability, and respect for privacy throughout the AI lifecycle.

Microsoft’s description of imperfect AI-generated content as “usefully wrong” reflects this commitment to transparency and accountability. According to Microsoft chief scientist Jaime Teevan, the company is aware that Copilot may produce errors or exhibit biases and has prepared mitigations for such cases.

✅ Read the full Article on CNBC

- Public-Private Collaboration: Initiatives like AI2030 highlight the importance of collaboration between the public and private sectors to foster responsible AI development. This includes shaping global AI agendas and establishing industry-specific frameworks and best practices.

✅ "AI2030 is an initiative aimed at harnessing the transformative power of AI to benefit humanity while minimizing its potential negative impact."

- Dynamic Frameworks: As AI evolves, so too will the frameworks guiding its development. These frameworks will need to be adaptable to new challenges and advancements in AI technology.

In December 2023, the Economic Advisory Council to the Prime Minister (EAC-PM) of India released a working paper titled "A Complex Adaptive System Framework to Regulate AI."

This paper discusses the dynamic nature of AI and proposes a regulatory framework based on complex adaptive system (CAS) thinking.

It outlines key principles to effectively regulate AI, including setting boundary conditions, enabling real-time monitoring, and guiding the evolution of AI systems.

The paper acknowledges the complex adaptive system characteristics of AI and emphasizes the importance of specialized, agile regulatory bodies to address the challenges posed by AI's rapid development.

This is a clear indication that official bodies are recognizing the need for dynamic and adaptable frameworks to keep pace with the advancements in AI technology.

✅ Source: A Complex Adaptive System Framework to Regulate AI

Role of Policy and Regulation in Promoting Responsible AI

- Global Regulatory Landscape: Policymakers and companies are working to ensure AI serves essential economic and societal objectives while mitigating risks. This involves developing comparable and interoperable rules across jurisdictions to promote the positive use of AI.

- Governance Frameworks: There will be a push for comprehensive governance frameworks that address all stages of AI policymaking. These frameworks will aim to uphold societal values such as fairness, freedom, and sustainability.

- Encouraging Innovation: While regulation is necessary to manage the risks associated with AI, it’s also important to encourage innovation. Governments have tools besides direct regulation to ensure a future that is both innovative and safe for the public.

These predictions and roles highlight the multifaceted approach needed to promote responsible AI. It’s a balance between fostering innovation and ensuring that AI technologies benefit society as a whole while minimizing potential negative impacts.

Conclusion

In this rapidly evolving digital age, AI has become a powerful tool with immense potential. However, with great power comes great responsibility. The importance of using AI responsibly cannot be overstated. It’s not just about optimizing efficiency or achieving business goals but also about ensuring fairness, transparency, and respect for user privacy.

We’ve explored the concept of AI, its common uses, and the importance of using it responsibly. We’ve also walked through the TRUST IN AI framework and its practical steps for putting responsible AI into practice.

But the journey doesn’t end here. As users and creators of AI, we have a continuous role to play. We need to stay informed, keep learning, and adapt as AI technology evolves. Most importantly, we need to put what we have learned into practice.

Let’s commit to using AI responsibly. Let’s strive to create an AI-driven world that is not just efficient and innovative, but also ethical and fair. Remember, the future of AI is in our hands.
